Multi-Model Proxy

Release Time 2025-03-03

Scene Description

The AI gateway exposes a single, unified invocation method to external callers and forwards each call internally to the corresponding large model, which makes backend model scheduling more flexible. Higress AI Gateway supports unified protocol conversion for more than 100 commonly used models, as well as model-level fallback.

During the evaluation of large models, the multi-model proxy lets you build one unified dataset and forward the same requests to different backend models to verify their effectiveness. Combined with observability plugins, the call chains of the different models can be traced clearly.

Deploy Higress AI Gateway

This guide is based on Docker deployment. For other deployment methods (such as k8s or helm), please refer to Quick Start.

Execute the following command:

    curl -sS https://higress.cn/ai-gateway/install.sh | bash

Note: During startup, AI Gateway needs to access Internet resources. Please make sure its runtime environment has proper Internet access.

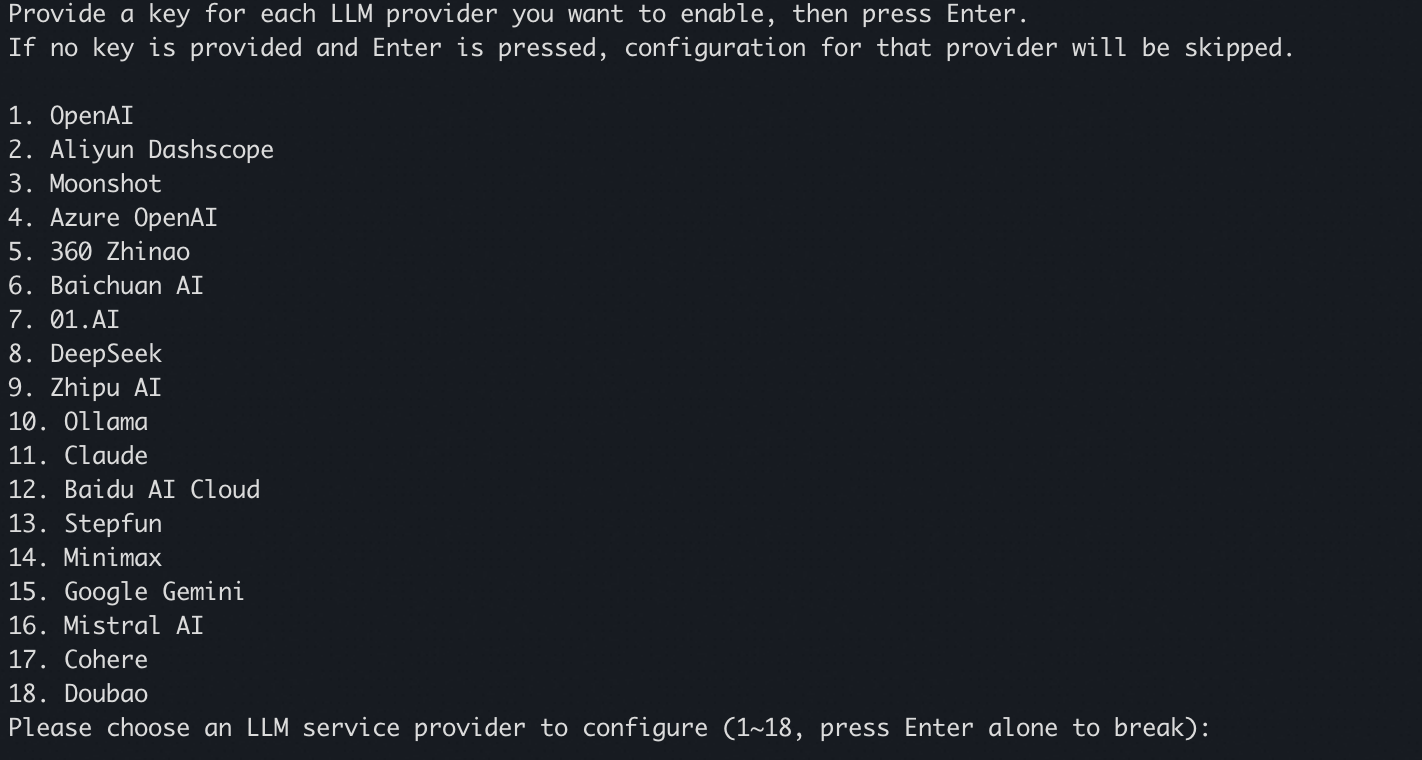

Follow the prompts to enter the Aliyun Dashscope or other API-KEY. You can also press Enter to skip this step and modify the key later in the console.

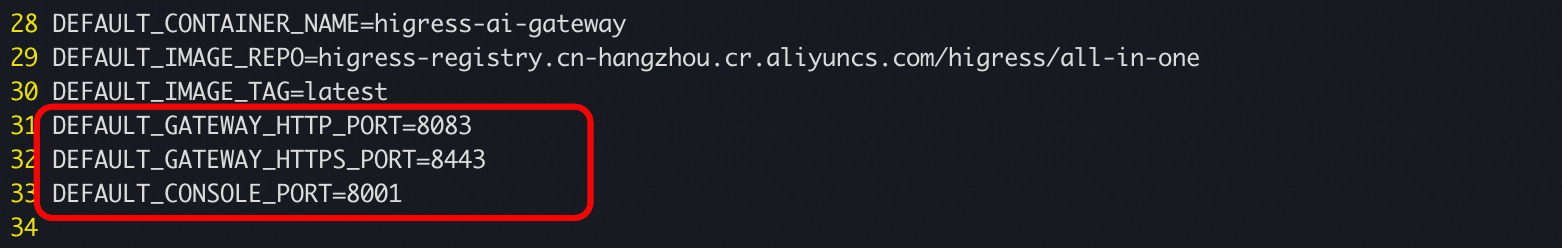

The default HTTP service port is 8080, the HTTPS service port is 8443, and the console service port is 8001. If you need to use other ports, download the deployment script using wget https://higress.cn/ai-gateway/install.sh, modify DEFAULT_GATEWAY_HTTP_PORT/DEFAULT_GATEWAY_HTTPS_PORT/DEFAULT_CONSOLE_PORT, and then execute the script using bash.
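The port change described above can also be scripted. The following is a minimal sketch, assuming install.sh declares each port as a plain `NAME=value` assignment at the start of a line (check the downloaded script before relying on this). `set_default` is a hypothetical helper, not part of Higress, and the port numbers in the usage comments are arbitrary examples.

```shell
# Hypothetical helper: rewrite a top-level `NAME=value` assignment in a script.
# Assumption: the variable is assigned at the start of a line, as NAME=value.
set_default() {
  file="$1"
  name="$2"
  value="$3"
  # Write to a temporary file and move it into place, which avoids the
  # differences between GNU and BSD `sed -i`.
  sed "s|^\(${name}=\).*|\1${value}|" "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}

# Usage (not executed here):
#   wget https://higress.cn/ai-gateway/install.sh
#   set_default install.sh DEFAULT_GATEWAY_HTTP_PORT 9080
#   set_default install.sh DEFAULT_GATEWAY_HTTPS_PORT 9443
#   set_default install.sh DEFAULT_CONSOLE_PORT 9001
#   bash install.sh
```

If the script assigns the variables in a different form, editing them by hand as described above remains the safer route.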

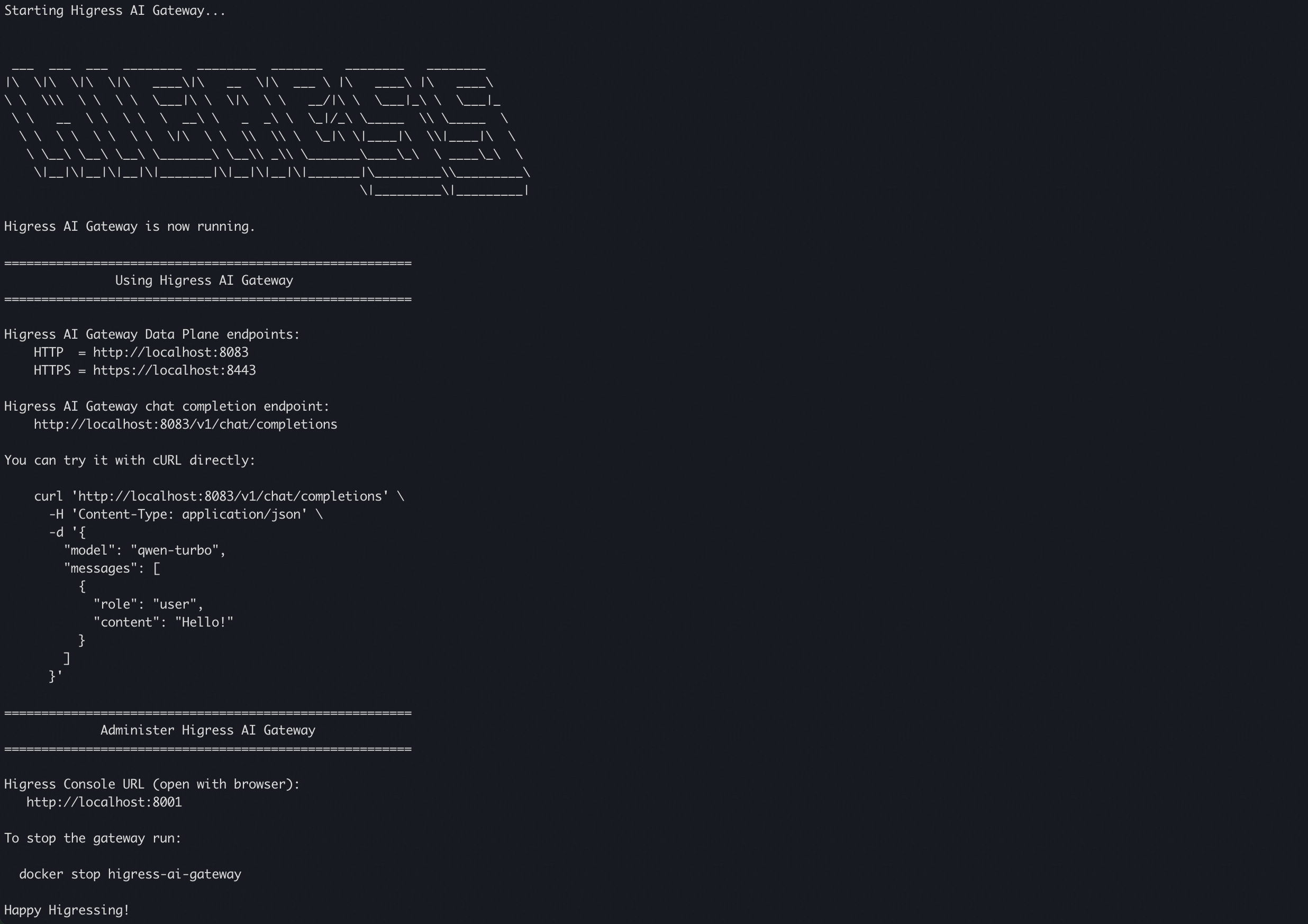

After the deployment completes, the following output is displayed.

Console Configuration

Access the Higress console via a browser at http://localhost:8001/. The first login requires setting up an administrator account and password.

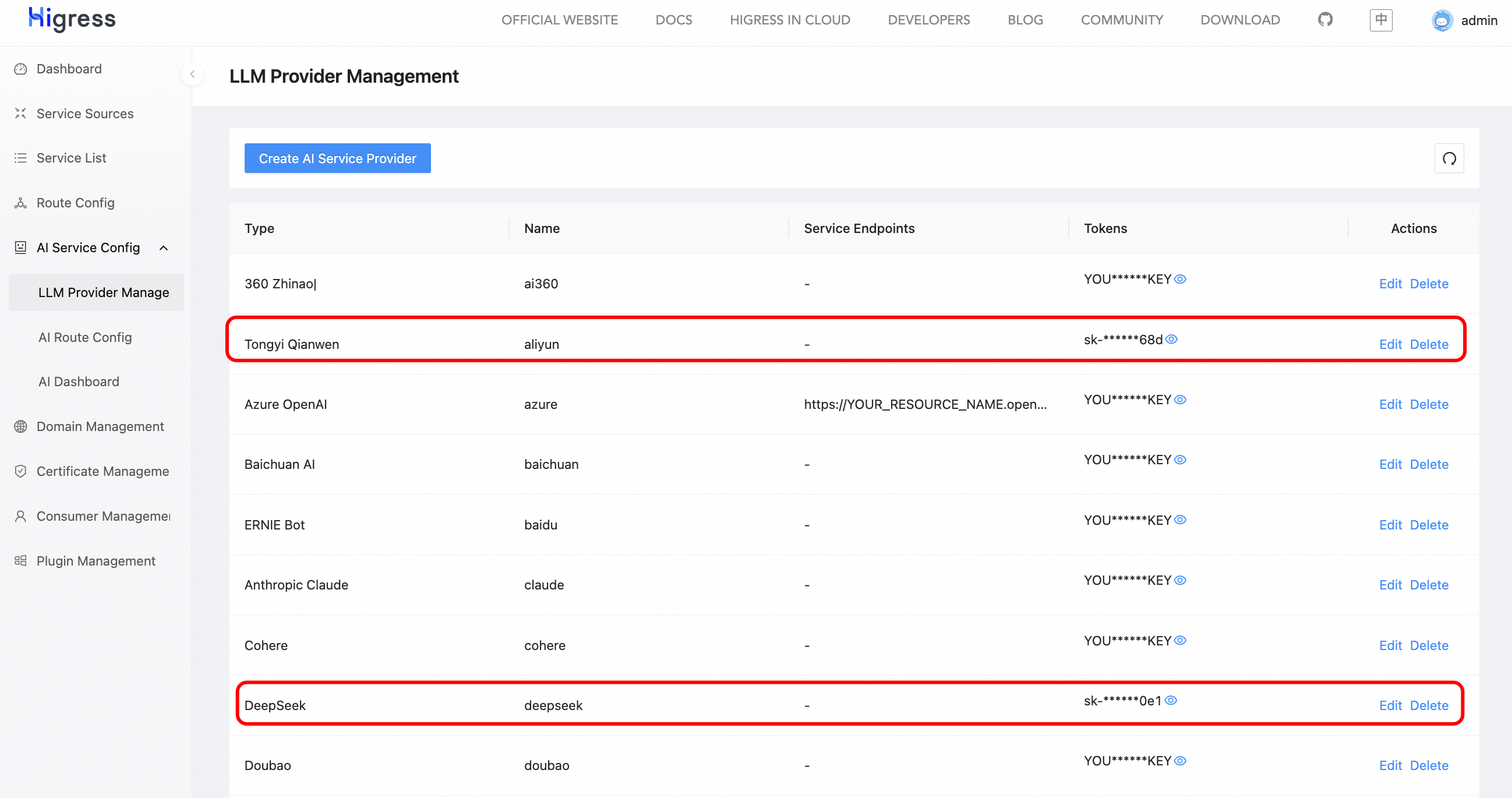

In the LLM Provider Management, you can configure the API-KEYs for the integrated providers. Currently integrated providers include Alibaba Cloud, DeepSeek, Azure OpenAI, OpenAI, DouBao, etc. Here we configure multi-model proxies for Tongyi Qwen and DeepSeek.

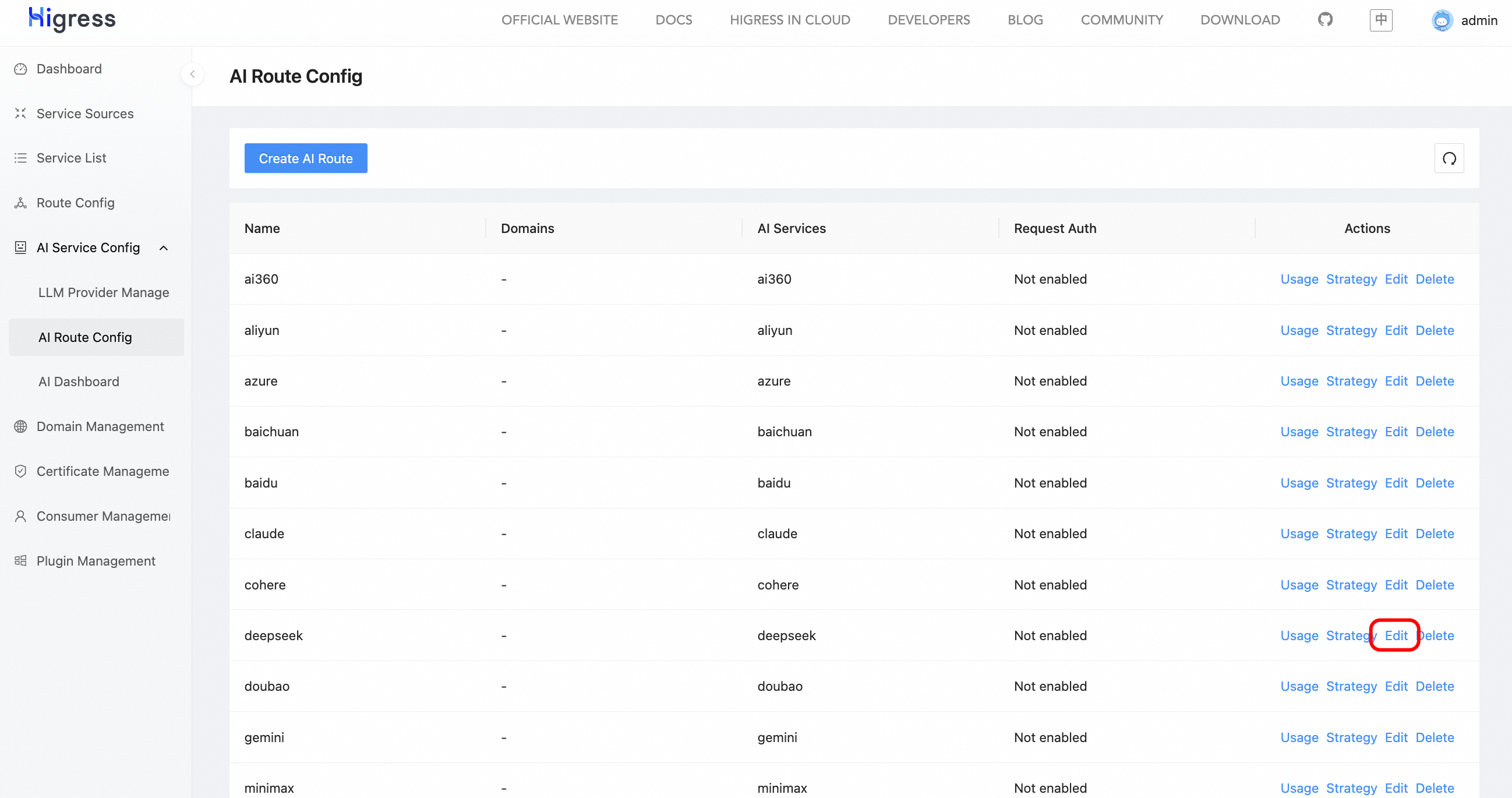

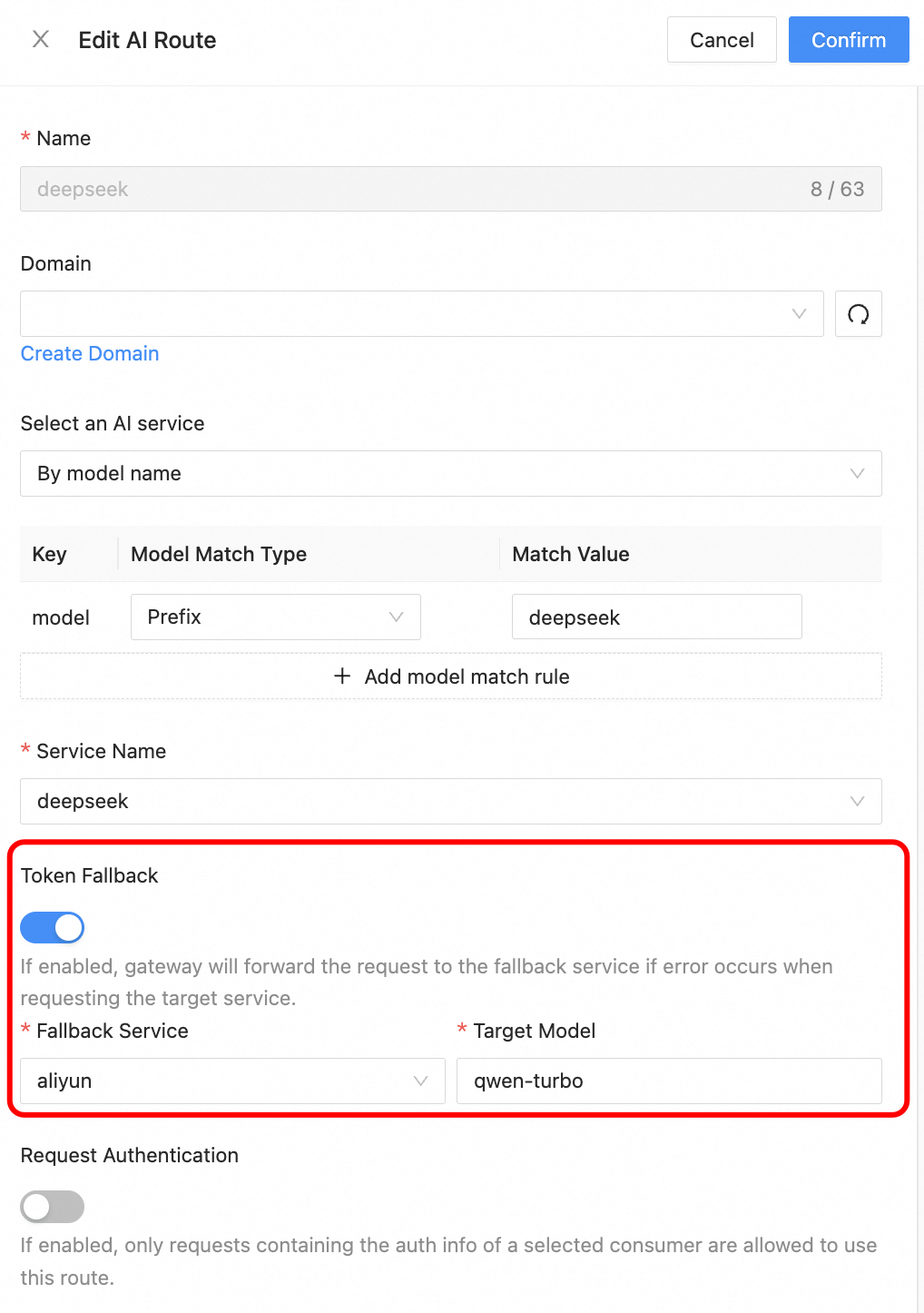

In the AI Route Config, configure the fallback settings for the DeepSeek route. When a request to the target service fails (e.g., due to rate limiting or access failure), the gateway falls back to the Alibaba Cloud qwen-turbo model.
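To check from the command line whether a fallback took place, one option is to inspect the `model` field of the response. The sketch below assumes that the gateway returns an OpenAI-style JSON body whose `model` field names the model that actually answered, and that the DeepSeek route accepts the model name `deepseek-chat`. Both are assumptions rather than documented behavior, and `extract_model` is a hypothetical helper.

```shell
# Hypothetical helper: read a JSON response from stdin and print the value of
# its "model" field. This is plain text matching, good enough for a quick
# check; it prints the last "model" value on the first line that has one.
extract_model() {
  sed -n 's/.*"model"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage (not executed here; assumes the gateway listens on port 8080):
#   curl -sS 'http://localhost:8080/v1/chat/completions' \
#     -H 'Content-Type: application/json' \
#     -d '{"model": "deepseek-chat", "messages": [{"role": "user", "content": "Who are you?"}]}' \
#     | extract_model
```

If the printed name is qwen-turbo rather than the DeepSeek model, the request was presumably served by the fallback route.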

Debugging

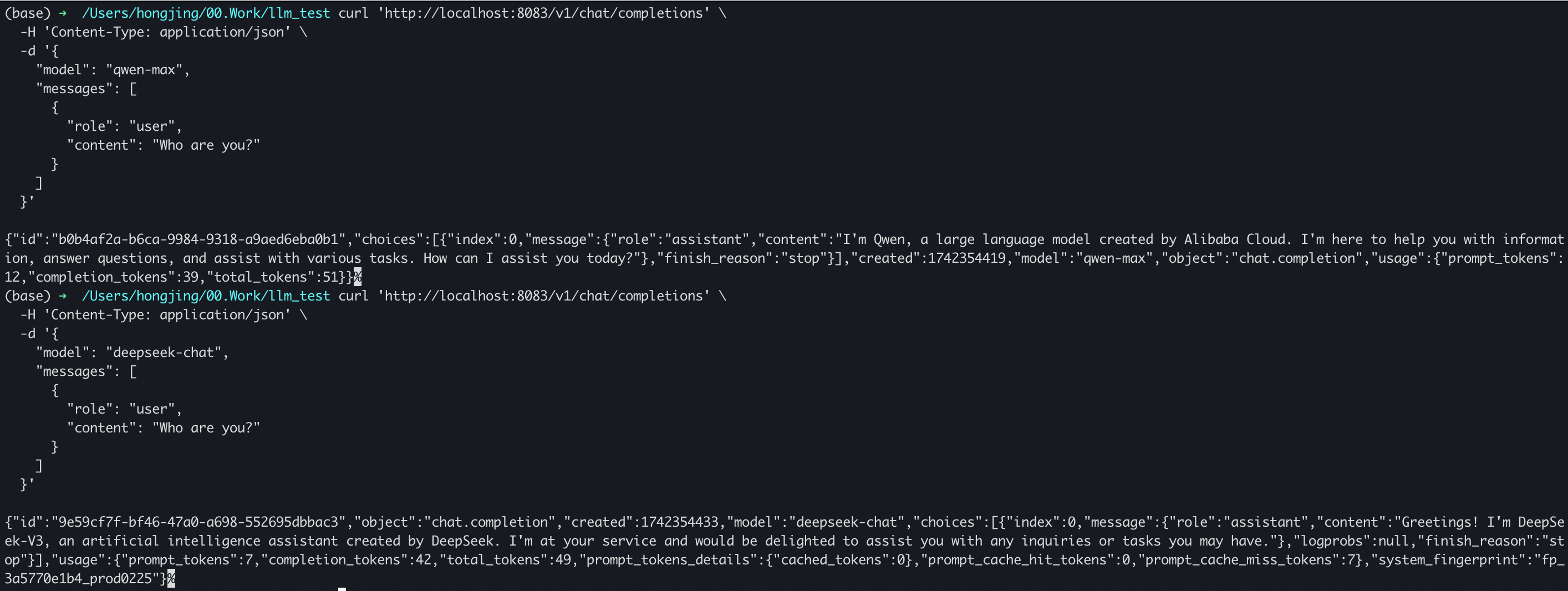

Open the system’s built-in command line and send a request using the following command (if the HTTP service is not deployed on port 8080, modify it to the corresponding port):

    curl 'http://localhost:8080/v1/chat/completions' \
      -H 'Content-Type: application/json' \
      -d '{
        "model": "qwen-max",
        "messages": [
          {
            "role": "user",
            "content": "Who are you?"
          }
        ]
      }'

Sample response:

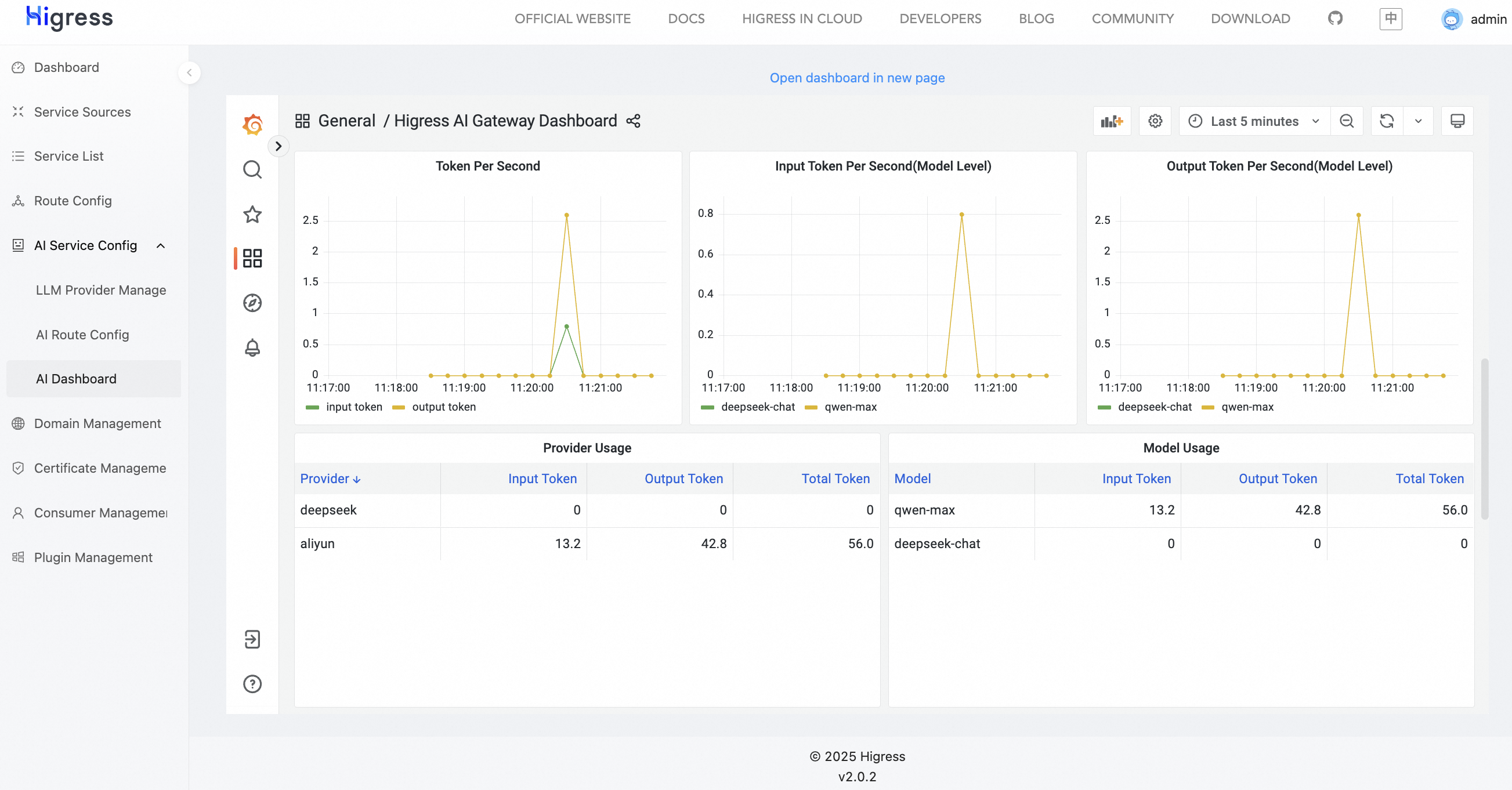
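Because every provider is reached through the same endpoint, the same prompt can be replayed against several models by changing only the `model` field, which is the basis of the evaluation scenario described earlier. The following is a rough sketch: `build_payload` and `ask_model` are hypothetical helpers, `GATEWAY_URL` is an assumed variable name, and `deepseek-chat` is an assumed model name that must match a route configured in the console.

```shell
# Base URL of the gateway; override it if the HTTP port is not 8080.
GATEWAY_URL="${GATEWAY_URL:-http://localhost:8080}"

# Build a chat-completions request body for one model.
# Note: no JSON escaping is done, so the prompt must not contain
# double quotes or backslashes.
build_payload() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}' "$1" "$2"
}

# Send one prompt to one model through the gateway.
ask_model() {
  curl -sS "${GATEWAY_URL}/v1/chat/completions" \
    -H 'Content-Type: application/json' \
    -d "$(build_payload "$1" "$2")"
}

# Usage (not executed here):
#   for m in qwen-max deepseek-chat; do
#     echo "== $m"
#     ask_model "$m" "Who are you?"
#     echo
#   done
```

Keeping the payload builder separate from the HTTP call makes it easy to inspect the exact request body before sending it.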

Observability

In the AI Dashboard, you can observe AI requests. Observability metrics include the number of input/output tokens per second, token usage by each provider/model, etc.

If you encounter any issues during deployment, feel free to describe them in a Higress GitHub issue.

If you are interested in future updates of Higress or wish to provide feedback, you are welcome to star the Higress GitHub repo.